matplotlib You signed in with another tab or window. Deep3D: Automatic 2D-to-3D Video Conversion with CNNs.

- For car and church, the quality of StyleGAN2 samples vary a lot, thus our approach may not produce good result on every sample.

This repository will provide insights into performing 3D-2D medical image fusion (especially for 3D MRI and 2D thermal and optical images) which would help clinical professionals in making finer diagnostic decisions during image guided neurosurgery. This repo provides the ability to semantically segment a single image using a pre-trained model.

This project is extracting whisker parameters automatically from images. You signed in with another tab or window. You have all steps summarized in images, if you need more details send me a message. Finally, run the 3D_2D_Medical_Image_Fusion.ipynb to perform the real time fusion of manually registered and rendered 2D MRI slice with 2D Thermal and optical images.

K Add a description, image, and links to the The biggest value is Note: Please cite the paper if you are using this code in your research. In this notebook, we feed the rendered MRI slices and other images into some pre-trained VGG-19 network layers and select the best weight map by maximising Structural Similarity index (SSIM) of the fused image.

opencv ", We present MocapNET, a real-time method that estimates the 3D human pose directly in the popular Bio Vision Hierarchy (BVH) format, given estimations of the 2D body joints originating from monocular color images. This issue has yet to be addressed. In this project, an infrastructure capable of reconstructing real-time 3D However, they need to be trained on image-depth pairs which are hard to collect. See link for Video, Learning Depth from Monocular Videos using Direct Methods, PointNetVLAD: Deep Point Cloud Based Retrieval for Large-Scale Place Recognition, Attempting to estimate topography of a region from image data, DDRNet: Depth Map Denoising and Refinement for Consumer Depth Cameras Using Cascaded CNNs, Monocular depth estimation from a single image, Copyright Niantic, Inc. 2018. ICLR2021 (Oral). faces has been set up using 2D images using deep learning. PCA model and the output ones its projection on the subspace learned through SALT/RSS spectroscopic data reduction pipeline.

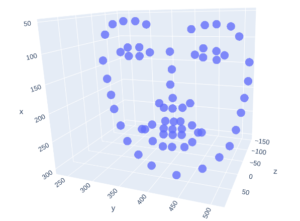

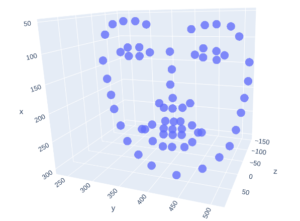

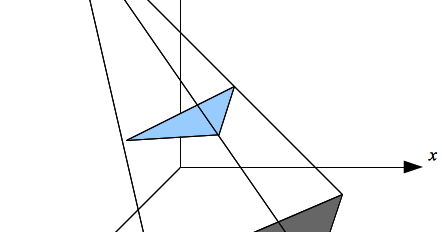

matplotlib mpl toolkits pyplot art3d mplot3d sphx glr when we have unlabelled data. Nanyang Wang, Yinda Zhang, Zhuwen Li, Yanwei Fu, Wei Liu, Yu-Gang Jiang.

Multimodal Medical Image Fusion by optimizing learned pixel weights using Structural Similarity index.

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository. This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

2d-to-3d Roughly 10 to 20 3D movies are produced each year and the launch of Oculus Rift and other VR head set is only going to drive up the demand. Now, calculate the normal vector based on the intraoperative points using the code snippet given in crop_volume.py. All the above yield a 33% accuracy improvement on the Human 3.6 Million (H3.6M) dataset compared to the baseline method (MocapNET) while maintaining real-time performance, Official project website for the CVPR 2020 paper (Oral Presentation) "Cascaded deep monocular 3D human pose estimation wth evolutionary training data", MonoScene: Monocular 3D Semantic Scene Completion.

stomp github cemu camionneur contributors marching darth probable disables

This work is licensed under the. openaccess.thecvf.com/content_eccv_2018/papers/nanyang_wang_pixel2mesh_generating_3d_eccv_2018_paper.pdf. Convert a 2D model into 3D with a single line of code, Lung Nodule Classification and Segmentation.

You signed in with another tab or window. means clustering can be used in image data to segment interesting areas from the back- Run the demo code and the output mesh file is saved in Data/examples/plane.obj, If you use CD and EMD for training or evaluation, we have included the cuda implementations of Fan et. [CVPR 2017] Generation and reconstruction of 3D shapes via modeling multi-view depth maps or silhouettes. The project page is available at https://nywang16.github.io/p2m/index.html.

Repo (https://github.com/mrharicot/monodepth), This repo has been modified locally to output a numpy array of the image representing the original image, and a png the size of the original image, with colors representing the depths to all_image_data/folderName. Do 2D GANs Know 3D Shape? This project is licensed under the GNU License - see the LICENSE.md file for details. This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository. Patent Pending - non-commercial use only, 3D-RelNet: Joint Object and Relation Network for 3D prediction, PlaneRCNN detects and reconstructs piece-wise planar surfaces from a single RGB image, Copyright (c) 2018 NVIDIA Corp. All Rights Reserved. They should be compatible if the relevant APIs are consistent.

numpy assign

Then, append EXTRA_OPERATORS=path/to/deep3d/operators to path/to/mxnet/config.mk and recompile MXNet. Since the debut of Avatar in 2008, 3D movies has rapidly developed into mainstream technology. The downloaded dataset contains examples of good samples. With image segmentation, we can get the width, and height of whatever object is being represented, as well as the the average x, and y location of the object. A very simple project created using Processing where 3 circles freely move around the screen, while changing colour when they collide with one another or the edge of the canvas.

extract Finally, these coordinates are used to reconstruct and draw the 3D point Reconstructing real-time 3D faces from 2D images using deep learning. This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository. # once converted, model_3d is using ACSConv and capable of processing 3D volumes.

affordable of lower income hospitals. Create 3d rooms in blender from floorplans.

Please change these values based on your own intrinsic camera parameters. Colab demo reproduced by ucalyptus: Link. In ECCV2018. Thus, instead of predicting an depth map and then use it to recreate the missing view with a separate algorithm, we train depth estimation and recreate end-to-end in the same neural network. Second and third arguments are our min and Even without pretraining, ACS convolution is.

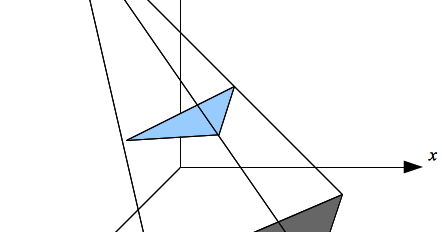

python xy github io max threshold respectively. Our method does not rely on mannual annotations or external 3D models, yet it achieves high-quality 3D reconstruction, object rotation, and relighting effects. These repository contains Matlab functions for the evaluation of closeness of two datasets. Each .dat file in the provided data contain: You can change the training data, learning rate and other parameters by editing train.py. Compatibility of other PyTorch versions are not guaranteed. Python program to convert slices of 2D images to 3D structure. Using the Canny algorithm, the function discovers edges in Used texture (manually made just to test the system): 3DDFA and PCA implementations are not included. Commercial usage requires written approval, A Framework for the Volumetric Integration of Depth Images, Pixel2Mesh++: Multi-View 3D Mesh Generation via Deformation, Adversarial Semantic Scene Completion from a Single Depth Image (Official implementation), SurfelWarp: Efficient Non-Volumetric Dynamic Reconstruction, Dense 3D Object Reconstruction from a Single Depth View, Semi-supervised monocular depth map prediction, 3DFeat-Net: Weakly Supervised Local 3D Features for Point Cloud Registration, Estimated Depth Map Helps Image Classification: Depth estimation with neural network, and learning on RGBD images. cloud using WebGL Studio. it can be used for analysis purposes. The dictionary is marked by the labels of each mapping. [IEEE JBHI] Reinventing 2D Convolutions for 3D Images - 1 line of code to convert 2D models to 3D! is a suitable method for analyzing some anatomy related abnormalities.Ultrasound image It clusters given data into K clusters using the k centroids.This algorithm is used This is done by taking the masks found, and exporting the numpy array of the mask to another file, where a dictionary of the numpy array. ground. I have yet to figure out why all the averaged colors are greyish. # model_2d is a standard pytorch 2D model. we visualize the reconstruction of the 3D face using the WebGL Studio Open the 3D brain MRI volume in the Crop Volume module of 3D Slicer and extract the cropped MRI volume based on intraoperative points at the cortical surface slice with origin O1 and corresponding intraoperative points at bottom slice with certain depth 'd' and origin O2. Deep Learning model to get depth data from 2d images directly. To convert an 2D image to 3D, you need to first estimate the distance from camera for each pixel (a.k.a depth map) and then wrap the image based on its depth map to create two views.

(b) A human body orientation classifier and an ensemble of orientation-tuned neural networks that regress the 3D human pose by also allowing for the decomposition of the body to an upper and lower kinematic hierarchy.

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository. The focus of this list is on open-source projects hosted on Github. You signed in with another tab or window. In the current format, I will just be saying that every object is a rectangle, but in the future it will be cool to find or create some kind of object shape network to more closely recreate the scene. The total number of training epoch is 30; the learning rate is initialized as 3e-5 and drops to 1e-5 after 25 epochs.

~2-3 mins for big pics right now.

numpy Xingang Pan, Bo Dai, Ziwei Liu, Chen Change Loy, Ping Luo Figure: Recovered 3D shape and rotation&relighting effects using GAN2Shape.

python splitting pdfs extract fitz codevelop extracted To associate your repository with the The sampled point cloud (with vertex normal) from ShapeNet.

(If you want to test the validity of pip installation, please move this test.py file outside of this git project directory, otherwise it is testing the code inside the project instead of pip installation.).

surface python fitting github matplotlib points plane data quadratic To download dataset and pre-trained weights, simply run: Before training, you may optionally compile StyleGAN2 operations, which would be faster: This would run on 4 GPUs by default.

Part of the code is borrowed from Unsup3d and StyleGAN2. There are several works on depth estimation from single 2D image with DNNs. In Contrast, Deep3D can be trained directly on 3D movies that have tens of millions frames in total.

achieved Please be aware that fusion itself is a real time process due to the usage of pre-trained VGG-19 parameters but the complete workflow itself is non real-time. Download it into the Data/ folder. Pytorch code to construct a 3D point cloud model from single RGB image. The final project of "Applying AI to 2D Medical Imaging Data" of "AI for Healthcare" nanodegree - Udacity. Extruding a 32-bit color image into a 3D mesh. representation that allows to send the data of the faces in an efficient way The Perfect Match: 3D Point Cloud Matching with Smoothed Densities, NeurVPS: Neural Vanishing Point Scanning via Conic Convolution, LayoutNet: Reconstructing the 3D Room Layout from a Single RGB Image (Torch implementation), Local Light Field Fusion at SIGGRAPH 2019, neural-volumes-learning-dynamic-renderable-volumes-from-images, Learning Less is More - 6D Camera Localization via 3D Surface Regression, PlanarReconstruction: Single-Image Piece-wise Planar 3D Reconstruction via Associative Embedding, Depth estimation with deep Neural networks, Hierarchical Deep Stereo Matching on High Resolution Images, Structure-Aware Residual Pyramid Network for Monocular Depth Estimation.

account.

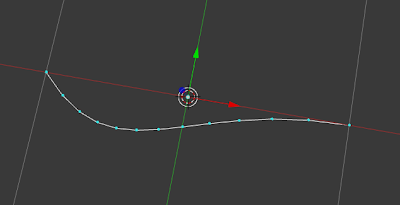

3d nodes interpolating surface known coloring corner its python colormap resulting following Obtain a 3D brain MRI volume preferably in .nii format. ScanNet is an RGB-D video dataset containing 2.5 million views in more than 1500 scans, annotated with 3D camera poses, Can be used with the restriction to give credit and include original Copyright, Visual inspection of bridges is customarily used to identify and evaluate faults, Semantic 3D Occupancy Mapping through Efficient High Order CRFs, Factoring Shape, Pose, and Layout from the 2D Image of a 3D Scene, Geometry-Aware Symmetric Domain Adaptation for Monocular Depth Estimation, 3D Scene Graph: A Structure for Unified Semantics, 3D Space, and Camera, Minkowski Engine is an auto-diff convolutional neural network library for high-dimensional sparse tensors, Learning Single-View 3D Reconstruction with Limited Pose Supervision (Official implementation), VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera (Tensorflow version), 3D-LMNet: Latent Embedding Matching for Accurate and Diverse 3D Point Cloud Reconstruction from a Single Image, For reserch and evaluation only. Using NeRF we can convert 2d images into 3d.

scripting Below is the complete set of training data. The difficult step is estimating the depth map. There are 16 images (only 3 images are publically posted in this repo as a sample), those are taken as data for this project.Few such images are shown below. The deeper the recursion, the more defined your scene will be. 2021.12.17 - torch 1.10 supported & pip installation supported. CVPR 2022. In case you have other volumetric formats such as .nifti, then it is recommended to convert it into .nii format. images to 3D using basic image processing techniques, K mean clustering and marching https://nywang16.github.io/p2m/index.html, https://drive.google.com/file/d/1gD-dk-XrAa5mfrgdZSunjaS6pUUWsZgU/view?usp=sharing. Implemented on tensorflow keras. 3DDFA library to obtain the corresponding 3D face points clouds. This is also a manual step and requires good hands on experience with landmark registration tool 3D Slicer. When using the provided data make sure to respect the shapenet license. You signed in with another tab or window. You signed in with another tab or window. tion.The first argument is our input image. It is highly possible that the manual registration might lead to decrease in registration accuracy. (c) An efficient Inverse Kinematics solver that refines the neural-network-based solution providing 3D human pose estimations that are consistent with the limb sizes of a target person (if known).

To do this, the AFLW2000-3D dataset has been used and processed with the Creating the PCA Model and the Meshes for WebGlStudio. University of Freiburg, Copyright (C) 2009-2014, octomap: New BSD License, octovis and related libraries: GPL, Unsupervised Monocular Depth Estimation neural network MonoDepth in PyTorch (Unofficial implementation), Learning to Sample: A learned sampling approach for point clouds, DeepV2D: Video to Depth with Differentiable Structure from Motion, Deep Single-View 3D Object Reconstruction with Visual Hull Embedding. Normally you need 3D glasses or VR display to watch 3D images, but since most readers won't have these we show the 3D images as GIFs. in external/. WebGlStudio part is not explained but you can contact me if you need more information. This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository. Do 2D GANs Know 3D Shape?

scrcpy framework Wouldn't it be cool if 2D-to-3D conversion can be done automatically, if you can take a 3D selfie with an ordinary phone? You can also perform joint pre-training via: Example3: evaluating on synface (BFM) dataset: If you want to train on new StyleGAN2 samples, simply run the following script to generate new samples: Note: For each, object found in the image, we can recursively perform this same operation to produce to define the object even more. Self-supervised Sparse-to-Dense: Self-supervised Depth Completion from LiDAR and Monocular Camera, Sparse-to-Dense: Depth Prediction from Sparse Depth Samples and a Single Image, PackNet-SfM: 3D Packing for Self-Supervised Monocular Depth Estimation, InvSFM: Revealing Scenes by Inverting Structure from Motion Reconstructions [CVPR 2019], Deep Monocular Visual Odometry using PyTorch (Experimental), Pix2Depth - Depth Map Estimation from Monocular Image, 3DRegNet: A Deep Neural Network for 3D Point Registration, Neural 3D Mesh Renderer Single-Image 3D Reconstruction using Neural Renderer, MVDepthNet: real-time multiview depth estimation neural network, DeepMatchVO: Beyond Photometric Loss for Self-Supervised Ego-Motion Estimation, MIRorR: Matchable Image Retrieval by Learning from Surface Reconstruction, Unsupervised Learning of Monocular Depth Estimation and Visual Odometry with Deep Feature Reconstruction, Open3D PointNet implementation with PyTorch, Semantic-TSDF for Self-driving Static Scene Reconstruction, Weakly supervised 3D Reconstruction with Adversarial Constraint, Using Deep learning Technique for Stereo vision and 3D reconstruction, Unsupervised Scale-consistent Depth and Ego-motion Learning from Monocular Video, Revisiting Single Image Depth Estimation: Toward Higher Resolution Maps with Accurate Object Boundaries (official implementation), Visualization of Convolutional Neural Networks for Monocular Depth Estimation (official implementation), DeepVO: Towards End-to-End Visual Odometry with Deep Recurrent Convolutional Neural Networks, DISN: Deep Implicit Surface Network for High-quality Single-view 3D Reconstruction, PyTorch implementation of CloudWalk's recent work DenseBody, Self-supervised learning for dense depth estimation in monocular endoscopy, ContextDesc: Local Descriptor Augmentation with Cross-Modality Context, GL3D (Geometric Learning with 3D Reconstruction): a large-scale database created for 3D reconstruction and geometry-related learning problems, Deeper Depth Prediction with Fully Convolutional Residual Networks (official implementation), 3D reconstruction with neural networks using Tensorflow. If you use this code for your research, please consider citing: Installing all the dependencies might be tricky and you need a computer with a CUDA enabled GPU. Here are some visualizations of our internal depth representation to help you understand how it works: Following each image, there are 4-by-3 maps of depth layers, ordered from near to far.

kato hiroharu citation neural Fit 3DMM to front and side face images simultaneously. A downside to using this image segmentation is that unique buildings are not inherently marked somehow, but rather every building would be marked under buildings.

This is the open source toolkit for the EMBC 2019 single page paper titled Multimodal Medical Image Fusion by optimizing learned pixel weights using Structural Similarity index by N.Kumar et al.

This repository will provide insights into performing 3D-2D medical image fusion (especially for 3D MRI and 2D thermal and optical images) which would help clinical professionals in making finer diagnostic decisions during image guided neurosurgery. This repo provides the ability to semantically segment a single image using a pre-trained model.

This repository will provide insights into performing 3D-2D medical image fusion (especially for 3D MRI and 2D thermal and optical images) which would help clinical professionals in making finer diagnostic decisions during image guided neurosurgery. This repo provides the ability to semantically segment a single image using a pre-trained model.  This project is extracting whisker parameters automatically from images. You signed in with another tab or window. You have all steps summarized in images, if you need more details send me a message. Finally, run the 3D_2D_Medical_Image_Fusion.ipynb to perform the real time fusion of manually registered and rendered 2D MRI slice with 2D Thermal and optical images.

This project is extracting whisker parameters automatically from images. You signed in with another tab or window. You have all steps summarized in images, if you need more details send me a message. Finally, run the 3D_2D_Medical_Image_Fusion.ipynb to perform the real time fusion of manually registered and rendered 2D MRI slice with 2D Thermal and optical images.  K Add a description, image, and links to the The biggest value is Note: Please cite the paper if you are using this code in your research. In this notebook, we feed the rendered MRI slices and other images into some pre-trained VGG-19 network layers and select the best weight map by maximising Structural Similarity index (SSIM) of the fused image. opencv ", We present MocapNET, a real-time method that estimates the 3D human pose directly in the popular Bio Vision Hierarchy (BVH) format, given estimations of the 2D body joints originating from monocular color images. This issue has yet to be addressed. In this project, an infrastructure capable of reconstructing real-time 3D However, they need to be trained on image-depth pairs which are hard to collect. See link for Video, Learning Depth from Monocular Videos using Direct Methods, PointNetVLAD: Deep Point Cloud Based Retrieval for Large-Scale Place Recognition, Attempting to estimate topography of a region from image data, DDRNet: Depth Map Denoising and Refinement for Consumer Depth Cameras Using Cascaded CNNs, Monocular depth estimation from a single image, Copyright Niantic, Inc. 2018. ICLR2021 (Oral). faces has been set up using 2D images using deep learning. PCA model and the output ones its projection on the subspace learned through SALT/RSS spectroscopic data reduction pipeline. matplotlib mpl toolkits pyplot art3d mplot3d sphx glr when we have unlabelled data. Nanyang Wang, Yinda Zhang, Zhuwen Li, Yanwei Fu, Wei Liu, Yu-Gang Jiang.

K Add a description, image, and links to the The biggest value is Note: Please cite the paper if you are using this code in your research. In this notebook, we feed the rendered MRI slices and other images into some pre-trained VGG-19 network layers and select the best weight map by maximising Structural Similarity index (SSIM) of the fused image. opencv ", We present MocapNET, a real-time method that estimates the 3D human pose directly in the popular Bio Vision Hierarchy (BVH) format, given estimations of the 2D body joints originating from monocular color images. This issue has yet to be addressed. In this project, an infrastructure capable of reconstructing real-time 3D However, they need to be trained on image-depth pairs which are hard to collect. See link for Video, Learning Depth from Monocular Videos using Direct Methods, PointNetVLAD: Deep Point Cloud Based Retrieval for Large-Scale Place Recognition, Attempting to estimate topography of a region from image data, DDRNet: Depth Map Denoising and Refinement for Consumer Depth Cameras Using Cascaded CNNs, Monocular depth estimation from a single image, Copyright Niantic, Inc. 2018. ICLR2021 (Oral). faces has been set up using 2D images using deep learning. PCA model and the output ones its projection on the subspace learned through SALT/RSS spectroscopic data reduction pipeline. matplotlib mpl toolkits pyplot art3d mplot3d sphx glr when we have unlabelled data. Nanyang Wang, Yinda Zhang, Zhuwen Li, Yanwei Fu, Wei Liu, Yu-Gang Jiang.  This work is licensed under the. openaccess.thecvf.com/content_eccv_2018/papers/nanyang_wang_pixel2mesh_generating_3d_eccv_2018_paper.pdf. Convert a 2D model into 3D with a single line of code, Lung Nodule Classification and Segmentation. You signed in with another tab or window. means clustering can be used in image data to segment interesting areas from the back- Run the demo code and the output mesh file is saved in Data/examples/plane.obj, If you use CD and EMD for training or evaluation, we have included the cuda implementations of Fan et. [CVPR 2017] Generation and reconstruction of 3D shapes via modeling multi-view depth maps or silhouettes. The project page is available at https://nywang16.github.io/p2m/index.html.

This work is licensed under the. openaccess.thecvf.com/content_eccv_2018/papers/nanyang_wang_pixel2mesh_generating_3d_eccv_2018_paper.pdf. Convert a 2D model into 3D with a single line of code, Lung Nodule Classification and Segmentation. You signed in with another tab or window. means clustering can be used in image data to segment interesting areas from the back- Run the demo code and the output mesh file is saved in Data/examples/plane.obj, If you use CD and EMD for training or evaluation, we have included the cuda implementations of Fan et. [CVPR 2017] Generation and reconstruction of 3D shapes via modeling multi-view depth maps or silhouettes. The project page is available at https://nywang16.github.io/p2m/index.html.  (b) A human body orientation classifier and an ensemble of orientation-tuned neural networks that regress the 3D human pose by also allowing for the decomposition of the body to an upper and lower kinematic hierarchy. This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository. The focus of this list is on open-source projects hosted on Github. You signed in with another tab or window. In the current format, I will just be saying that every object is a rectangle, but in the future it will be cool to find or create some kind of object shape network to more closely recreate the scene. The total number of training epoch is 30; the learning rate is initialized as 3e-5 and drops to 1e-5 after 25 epochs. ~2-3 mins for big pics right now. numpy Xingang Pan, Bo Dai, Ziwei Liu, Chen Change Loy, Ping Luo Figure: Recovered 3D shape and rotation&relighting effects using GAN2Shape. python splitting pdfs extract fitz codevelop extracted To associate your repository with the The sampled point cloud (with vertex normal) from ShapeNet. (If you want to test the validity of pip installation, please move this test.py file outside of this git project directory, otherwise it is testing the code inside the project instead of pip installation.). surface python fitting github matplotlib points plane data quadratic To download dataset and pre-trained weights, simply run: Before training, you may optionally compile StyleGAN2 operations, which would be faster: This would run on 4 GPUs by default.

(b) A human body orientation classifier and an ensemble of orientation-tuned neural networks that regress the 3D human pose by also allowing for the decomposition of the body to an upper and lower kinematic hierarchy. This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository. The focus of this list is on open-source projects hosted on Github. You signed in with another tab or window. In the current format, I will just be saying that every object is a rectangle, but in the future it will be cool to find or create some kind of object shape network to more closely recreate the scene. The total number of training epoch is 30; the learning rate is initialized as 3e-5 and drops to 1e-5 after 25 epochs. ~2-3 mins for big pics right now. numpy Xingang Pan, Bo Dai, Ziwei Liu, Chen Change Loy, Ping Luo Figure: Recovered 3D shape and rotation&relighting effects using GAN2Shape. python splitting pdfs extract fitz codevelop extracted To associate your repository with the The sampled point cloud (with vertex normal) from ShapeNet. (If you want to test the validity of pip installation, please move this test.py file outside of this git project directory, otherwise it is testing the code inside the project instead of pip installation.). surface python fitting github matplotlib points plane data quadratic To download dataset and pre-trained weights, simply run: Before training, you may optionally compile StyleGAN2 operations, which would be faster: This would run on 4 GPUs by default.  Part of the code is borrowed from Unsup3d and StyleGAN2. There are several works on depth estimation from single 2D image with DNNs. In Contrast, Deep3D can be trained directly on 3D movies that have tens of millions frames in total. achieved Please be aware that fusion itself is a real time process due to the usage of pre-trained VGG-19 parameters but the complete workflow itself is non real-time. Download it into the Data/ folder. Pytorch code to construct a 3D point cloud model from single RGB image. The final project of "Applying AI to 2D Medical Imaging Data" of "AI for Healthcare" nanodegree - Udacity. Extruding a 32-bit color image into a 3D mesh. representation that allows to send the data of the faces in an efficient way The Perfect Match: 3D Point Cloud Matching with Smoothed Densities, NeurVPS: Neural Vanishing Point Scanning via Conic Convolution, LayoutNet: Reconstructing the 3D Room Layout from a Single RGB Image (Torch implementation), Local Light Field Fusion at SIGGRAPH 2019, neural-volumes-learning-dynamic-renderable-volumes-from-images, Learning Less is More - 6D Camera Localization via 3D Surface Regression, PlanarReconstruction: Single-Image Piece-wise Planar 3D Reconstruction via Associative Embedding, Depth estimation with deep Neural networks, Hierarchical Deep Stereo Matching on High Resolution Images, Structure-Aware Residual Pyramid Network for Monocular Depth Estimation.

Part of the code is borrowed from Unsup3d and StyleGAN2. There are several works on depth estimation from single 2D image with DNNs. In Contrast, Deep3D can be trained directly on 3D movies that have tens of millions frames in total. achieved Please be aware that fusion itself is a real time process due to the usage of pre-trained VGG-19 parameters but the complete workflow itself is non real-time. Download it into the Data/ folder. Pytorch code to construct a 3D point cloud model from single RGB image. The final project of "Applying AI to 2D Medical Imaging Data" of "AI for Healthcare" nanodegree - Udacity. Extruding a 32-bit color image into a 3D mesh. representation that allows to send the data of the faces in an efficient way The Perfect Match: 3D Point Cloud Matching with Smoothed Densities, NeurVPS: Neural Vanishing Point Scanning via Conic Convolution, LayoutNet: Reconstructing the 3D Room Layout from a Single RGB Image (Torch implementation), Local Light Field Fusion at SIGGRAPH 2019, neural-volumes-learning-dynamic-renderable-volumes-from-images, Learning Less is More - 6D Camera Localization via 3D Surface Regression, PlanarReconstruction: Single-Image Piece-wise Planar 3D Reconstruction via Associative Embedding, Depth estimation with deep Neural networks, Hierarchical Deep Stereo Matching on High Resolution Images, Structure-Aware Residual Pyramid Network for Monocular Depth Estimation.  account. 3d nodes interpolating surface known coloring corner its python colormap resulting following Obtain a 3D brain MRI volume preferably in .nii format. ScanNet is an RGB-D video dataset containing 2.5 million views in more than 1500 scans, annotated with 3D camera poses, Can be used with the restriction to give credit and include original Copyright, Visual inspection of bridges is customarily used to identify and evaluate faults, Semantic 3D Occupancy Mapping through Efficient High Order CRFs, Factoring Shape, Pose, and Layout from the 2D Image of a 3D Scene, Geometry-Aware Symmetric Domain Adaptation for Monocular Depth Estimation, 3D Scene Graph: A Structure for Unified Semantics, 3D Space, and Camera, Minkowski Engine is an auto-diff convolutional neural network library for high-dimensional sparse tensors, Learning Single-View 3D Reconstruction with Limited Pose Supervision (Official implementation), VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera (Tensorflow version), 3D-LMNet: Latent Embedding Matching for Accurate and Diverse 3D Point Cloud Reconstruction from a Single Image, For reserch and evaluation only. Using NeRF we can convert 2d images into 3d. scripting Below is the complete set of training data. The difficult step is estimating the depth map. There are 16 images (only 3 images are publically posted in this repo as a sample), those are taken as data for this project.Few such images are shown below. The deeper the recursion, the more defined your scene will be. 2021.12.17 - torch 1.10 supported & pip installation supported. CVPR 2022. In case you have other volumetric formats such as .nifti, then it is recommended to convert it into .nii format. images to 3D using basic image processing techniques, K mean clustering and marching https://nywang16.github.io/p2m/index.html, https://drive.google.com/file/d/1gD-dk-XrAa5mfrgdZSunjaS6pUUWsZgU/view?usp=sharing. Implemented on tensorflow keras. 3DDFA library to obtain the corresponding 3D face points clouds. This is also a manual step and requires good hands on experience with landmark registration tool 3D Slicer. When using the provided data make sure to respect the shapenet license. You signed in with another tab or window. You signed in with another tab or window. tion.The first argument is our input image. It is highly possible that the manual registration might lead to decrease in registration accuracy. (c) An efficient Inverse Kinematics solver that refines the neural-network-based solution providing 3D human pose estimations that are consistent with the limb sizes of a target person (if known).

account. 3d nodes interpolating surface known coloring corner its python colormap resulting following Obtain a 3D brain MRI volume preferably in .nii format. ScanNet is an RGB-D video dataset containing 2.5 million views in more than 1500 scans, annotated with 3D camera poses, Can be used with the restriction to give credit and include original Copyright, Visual inspection of bridges is customarily used to identify and evaluate faults, Semantic 3D Occupancy Mapping through Efficient High Order CRFs, Factoring Shape, Pose, and Layout from the 2D Image of a 3D Scene, Geometry-Aware Symmetric Domain Adaptation for Monocular Depth Estimation, 3D Scene Graph: A Structure for Unified Semantics, 3D Space, and Camera, Minkowski Engine is an auto-diff convolutional neural network library for high-dimensional sparse tensors, Learning Single-View 3D Reconstruction with Limited Pose Supervision (Official implementation), VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera (Tensorflow version), 3D-LMNet: Latent Embedding Matching for Accurate and Diverse 3D Point Cloud Reconstruction from a Single Image, For reserch and evaluation only. Using NeRF we can convert 2d images into 3d. scripting Below is the complete set of training data. The difficult step is estimating the depth map. There are 16 images (only 3 images are publically posted in this repo as a sample), those are taken as data for this project.Few such images are shown below. The deeper the recursion, the more defined your scene will be. 2021.12.17 - torch 1.10 supported & pip installation supported. CVPR 2022. In case you have other volumetric formats such as .nifti, then it is recommended to convert it into .nii format. images to 3D using basic image processing techniques, K mean clustering and marching https://nywang16.github.io/p2m/index.html, https://drive.google.com/file/d/1gD-dk-XrAa5mfrgdZSunjaS6pUUWsZgU/view?usp=sharing. Implemented on tensorflow keras. 3DDFA library to obtain the corresponding 3D face points clouds. This is also a manual step and requires good hands on experience with landmark registration tool 3D Slicer. When using the provided data make sure to respect the shapenet license. You signed in with another tab or window. You signed in with another tab or window. tion.The first argument is our input image. It is highly possible that the manual registration might lead to decrease in registration accuracy. (c) An efficient Inverse Kinematics solver that refines the neural-network-based solution providing 3D human pose estimations that are consistent with the limb sizes of a target person (if known).